The FDA outlines draft guidance on AI for medical devices

The agency also published draft guidance on the use of AI in drug development

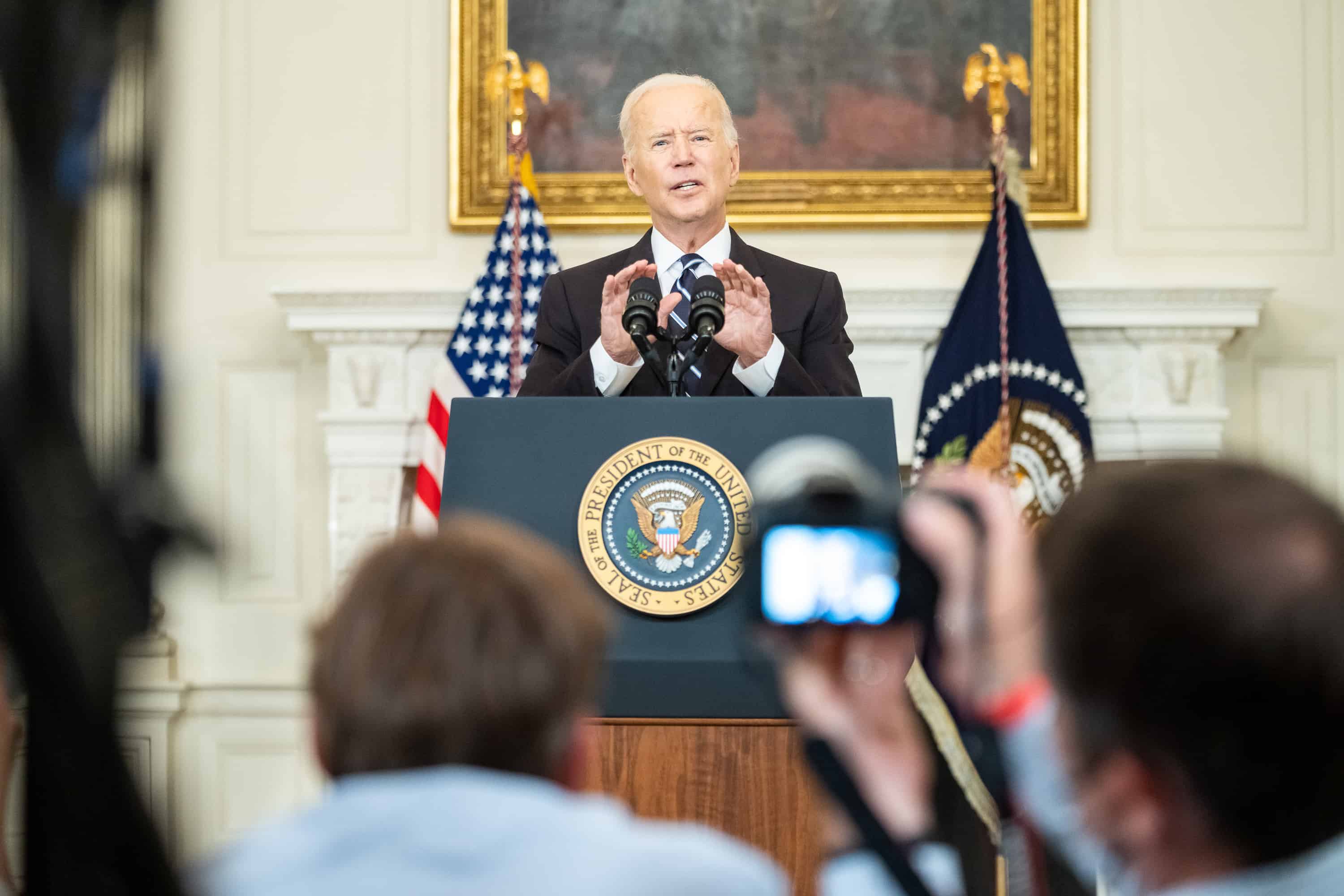

The Biden administration has issued a number of orders and guidelines on how artificial intelligence should be deployed. These include the Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence, which directs action to strengthen AI safety and security while protecting the privacy of Americans. Earlier this month, the administration revealed new guidelines for agencies buying AI and established teams to work together on informing future AI policy.

Now President Biden has unveiled the first-ever National Security Memorandum (NSM) on AI, directing the U.S. Government to implement what it calls "concrete and impactful steps" to ensure not only that the United States leads the world’s development of AI, but also that the technology is used responsibly in the realm of national security.

The NSM includes directions to improve the security and diversity of chip supply chains with AI in mind, while also providing AI developers with cybersecurity and counterintelligence information so they can keep their inventions secure. It also formally designates the AI Safety Institute as U.S. industry’s primary port of contact in the U.S. Government, and provides mechanisms for the Institute to partner with national security agencies, including the intelligence community, the Department of Defense, and the Department of Energy.

In addition, the NSM directs the National Economic Council to coordinate an economic assessment of the competitive advantage of the United States private sector AI ecosystem.

Other goals of the NSM are to harness AI technologies to advance the U.S. Government’s national security mission and to advance international consensus and governance around AI. The latter means directing the U.S. Government to collaborate with allies and partners to establish a "stable, responsible, and rights-respecting governance framework to ensure the technology is developed and used in ways that adhere to international law while protecting human rights and fundamental freedoms."

The NSM also directs the creation of a Framework for AI Governance and Risk Management for National Security, which provides further detail and guidance to implement the NSM, including requiring mechanisms for risk management, evaluations, accountability, and transparency.

The framework declares that agencies cannot use AI "in any manner that violates domestic or international law obligations and shall not use AI in a manner or for purposes that pose unacceptable levels of risk."

For example, agencies are prohibited from using AI to profile, target, or track activities of individuals based solely on their exercise of rights protected under the Constitution and U.S. law, including freedom of expression, association, and assembly rights.

They also cannot unlawfully disadvantage an individual based on their ethnicity, national origin, race, sex, gender, gender identity, sexual orientation, disability status, or religion.

Perhaps most importantly, the framework prohibits the removal of a human “in the loop” for actions critical to informing and executing decisions by the President to initiate or terminate nuclear weapons employment.

"The NSM is designed to galvanize federal government adoption of AI to advance the national security mission, including by ensuring that such adoption reflects democratic values and protects human rights, civil rights, civil liberties and privacy," the White House wrote in a press release.

"In addition, the NSM seeks to shape international norms around AI use to reflect those same democratic values, and directs actions to track and counter adversary development and use of AI for national security purposes."

(Image source: whitehouse.gov)
